Agentic AI is changing how work gets done — LLM gateways help enterprises keep it scalable, safe and cost-effective.

The automation technology landscape has evolved from rule-based automation to machine learning to generative AI to agentic AI. Agentic AI has emerged as one of the frontier technologies that can help enterprises achieve breakthroughs and realize value across the organizational value chain. As we move from single agents to multi-agent systems, organizations can accomplish much more complex workflows quickly and efficiently.

What sets agentic AI apart is its ability to perform complex, multi-step tasks autonomously. This matters because agentic AI will reshape how work gets done, and organizations need to prepare for a future in which employees work alongside AI agents as co-workers. As with any emerging technology architecture, agentic AI poses unique challenges for the engineering teams that build, scale and maintain it. This article outlines a platform-centric approach to building and deploying agentic applications at scale, based on experience working with practitioners across several Fortune 500 enterprises.

Core components of agentic AI use cases

Most agentic AI use cases have at least four core components that need to be stitched together:

- Prompts. Set the goal or core functionality of the workflow that the agent is expected to accomplish.

- MCP servers. Servers that implement the Model Context Protocol (MCP), an emerging standard for connecting AI agents to external data sources and services.

- Models. Perform specific functions; a model could be a general-purpose LLM or a fine-tuned model.

- Agents. Agents of different types, such as reactive, deliberative, learning or autonomous agents.
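To make the division of labor concrete, the following Python sketch wires the four components together in a toy agent loop. Every name in it (`Prompt`, `TOOLS`, `fake_model`, `Agent` and the `CALL`/`FINAL` reply convention) is a hypothetical illustration: the tool dictionary stands in for tools an MCP server would expose, and the stub model stands in for a real LLM. It is not the MCP SDK or any particular agent framework.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Prompt:
    """Hypothetical versioned prompt template that sets the agent's goal."""
    name: str
    version: int
    template: str

    def render(self, **kwargs: str) -> str:
        return self.template.format(**kwargs)


# Hypothetical tool registry standing in for tools exposed by MCP servers.
TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_ticket": lambda ticket_id: f"Ticket {ticket_id}: status=open",
}


def fake_model(prompt: str) -> str:
    """Stub model: requests a tool call first, then answers once a tool result is present."""
    if "TOOL_RESULT" not in prompt:
        return "CALL lookup_ticket JIRA-42"
    return "FINAL The ticket is still open."


@dataclass
class Agent:
    prompt: Prompt
    model: Callable[[str], str]
    tools: dict[str, Callable[[str], str]]
    max_steps: int = 5

    def run(self, task: str) -> str:
        context = self.prompt.render(task=task)
        for _ in range(self.max_steps):
            reply = self.model(context)
            if reply.startswith("FINAL "):
                return reply.removeprefix("FINAL ")
            # Assumed reply convention: "CALL <tool_name> <argument>".
            _, tool_name, arg = reply.split(" ", 2)
            result = self.tools[tool_name](arg)
            # Feed the tool output back to the model on the next step.
            context += f"\nTOOL_RESULT {tool_name}: {result}"
        raise RuntimeError("agent did not finish within max_steps")


agent = Agent(
    prompt=Prompt("ticket-status", 1, "Goal: answer the user's question. Task: {task}"),
    model=fake_model,
    tools=TOOLS,
)
print(agent.run("Is JIRA-42 resolved?"))  # The ticket is still open.
```

Each component can be changed independently: a new prompt version, a different model or an extra tool, which is exactly the surface that becomes hard to manage as the number of agents grows.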

Challenges across the core components

Engineering teams that build agentic applications spend significant time and effort scaling, supporting and maintaining these components, for the reasons listed below.

Prompts

- Lack of version control

- Absence of standardized testing

- Limited portability across models

Models

- Complexity of self-hosting

- High latency and costs

- Complexity in scaling infrastructure for fine-tuning

- Risk of vendor lock-in

- Single point of failure if dependent on a single model provider

Tools

- Hosting and discovery of MCP servers

- Proliferation of custom tools

- Weak access and policy controls

- Lack of multi-model support

- Inconsistent observability standards

Agents

- Debugging challenges

- Hosting: backend, memory, frontend, planner, etc.

- State and memory handling issues

- Security gaps

The list above highlights the considerations involved in implementing agentic AI at scale, where teams must balance cost, performance, scalability, resiliency and safety.

A platform approach to address these challenges

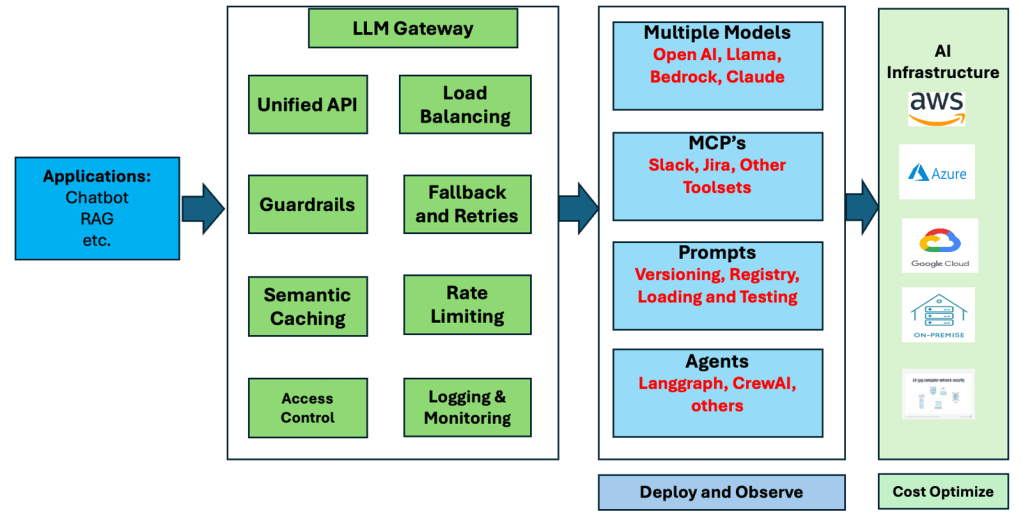

As AI and agentic AI applications become a foundational capability for enterprises, they have to be treated as mission-critical systems. AI engineering leaders within enterprises are starting to assemble these components using a platform approach, with an LLM gateway (also known as an AI gateway) acting as the central control plane that orchestrates workloads across models, MCP servers and agents. In addition, an LLM gateway helps implement guardrails, provides observability, acts as a model router, reduces costs and offers a host of other benefits.

In other words, an LLM gateway helps increase resiliency and scalability while reducing costs. Given that most enterprises are moving toward a multi-model landscape, industry analysts and experts expect LLM gateways to play a critical role in increasing reliability, mitigating risks and optimizing costs.

A recent Gartner report titled ‘Optimize AI costs and reliability using AI gateways and model routers’ summarizes the trend in the following way: “The core challenge of scaling AI applications is the constant trade-off between cost and performance. To build high-performing yet cost-optimized AI applications, software engineering leaders should invest in AI gateways and model routing capabilities.”

Industry insiders such as Anuraag Gutgutia, COO of AI platform company Truefoundry, say: “Organizations, particularly the ones that operate in a highly regulated environment, should realize that the absence of such a platform exposes them to several risks across the layers. The lack of a robust LLM gateway and a deployment platform on day 1 has a significant impact on speed, risk mitigation, costs and scalability.”

For example, without guardrails there is a risk of PII leaking outside the organization. Without an LLM gateway's unified APIs, model upgrades could take weeks. And without a deployment platform, the deployment of each component could be broken or siloed, causing rework and delays.

Features of an LLM gateway

An LLM gateway, in its basic form, is a middleware component that sits between AI applications and various foundation model providers. It acts as a unified entry point, much like an API gateway that routes traffic between requesters and backend services. However, a well-architected LLM gateway offers many more features: it abstracts complexity, standardizes access to multiple models and MCP servers, enforces governance and optimizes operational efficiency. Support for MCP servers enables AI agents to discover, authenticate with and consistently invoke enterprise tools and functionality.
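The "unified entry point" idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not any vendor's API: `LLMGateway`, `Provider`, `EchoProvider` and the alias names are hypothetical, and the stub provider simply echoes its input instead of calling a model. Applications address a stable alias, so swapping the model behind it is a change in the gateway rather than in every application.

```python
from typing import Protocol


class Provider(Protocol):
    """Interface each provider adapter implements (hypothetical, not a vendor SDK)."""

    def complete(self, model: str, prompt: str) -> str: ...


class EchoProvider:
    """Stub adapter standing in for a hosted or self-hosted model provider."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, model: str, prompt: str) -> str:
        # A real adapter would translate the request to the provider's own API here.
        return f"[{self.name}/{model}] {prompt}"


class LLMGateway:
    """Single entry point: applications name an alias; the gateway resolves the provider."""

    def __init__(self) -> None:
        self._routes: dict[str, tuple[Provider, str]] = {}

    def register(self, alias: str, provider: Provider, model: str) -> None:
        # Map a stable alias used by applications to a concrete provider and model.
        self._routes[alias] = (provider, model)

    def complete(self, alias: str, prompt: str) -> str:
        if alias not in self._routes:
            raise KeyError(f"unknown model alias: {alias}")
        provider, model = self._routes[alias]
        return provider.complete(model, prompt)


gateway = LLMGateway()
gateway.register("chat-default", EchoProvider("provider-a"), "model-v1")
print(gateway.complete("chat-default", "Hello"))  # [provider-a/model-v1] Hello

# Model upgrade: re-point the alias; application code calling "chat-default" is unchanged.
gateway.register("chat-default", EchoProvider("provider-b"), "model-v2")
print(gateway.complete("chat-default", "Hello"))  # [provider-b/model-v2] Hello
```

The design choice is a layer of indirection: because applications depend on an alias rather than on a specific provider SDK, an upgrade or provider switch happens in one place.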

The diagram below provides a view of a typical gateway:

[Diagram: a typical LLM gateway. Credit: Hari Subramanian]

Key architectural considerations and benefits

The key architectural requirements for a best-in-class gateway are high availability, low latency, high throughput and scalability.

A deployment platform with a central LLM gateway can provide several benefits to the enterprise, such as:

- Unified model access. A single endpoint for accessing multiple LLMs, whether open source or closed source, hosted on-premises or with a hyperscaler.

- Routing and fallback. The gateway can route workloads based on latency, cost and region, and can automatically retry or fall back to another model when a request fails, providing much-needed resiliency.

- Rate limiting. The gateway can cap the number of requests and tokens per team, per model and so on.

- Guardrails. Enforcing guardrails, such as PII filtering and protection against toxic content and jailbreaks, is one of a gateway's most important features.

- Observability and tracing. The gateway can log full prompts, per-user request counts, latency, response times and more. This data is critical for capacity sizing and scaling.

- MCP and tool integration. The gateway enables agents to discover and invoke enterprise tools such as Jira and collaboration platforms.

- A2A protocol support. Support for the Agent2Agent (A2A) protocol enables agents to discover each other's capabilities, exchange messages, negotiate task delegation and more.

- Deployment flexibility. A well-designed gateway platform supports deployment on-premises, in public clouds and in hybrid configurations, offering a high degree of flexibility.

- Developer sandbox. The gateway should include a developer sandbox in which developers can prototype prompts and test agent workflows. This encourages experimentation and shortens the path from development to production.

- Other advanced features. Gateway platforms also provide capabilities such as canary testing, batch processing, fine-tuning and pipeline testing.

These capabilities help enterprises not only address the challenges of building and deploying agentic AI applications at scale but also maintain that infrastructure and keep it up to date.
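Several of these capabilities compose into a single request path. The Python sketch below chains a per-team request quota, a PII-redaction guardrail, cost-ordered routing with fallback and a request log. It is a simplified illustration under loud assumptions: `GatewayPipeline`, `Backend`, the backend names and the costs are all hypothetical, the regexes catch only email addresses and US Social Security number patterns, and the quota is a fixed count rather than a time-windowed limit. Production gateways typically use dedicated PII detectors, windowed rate limiters and proper tracing.

```python
import re
import time
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable

# Naive PII patterns (assumption): email addresses and US SSN-style numbers only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+")
SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact_pii(text: str) -> str:
    """Guardrail: replace matched PII with placeholders before the prompt leaves."""
    return SSN.sub("[SSN]", EMAIL.sub("[EMAIL]", text))


@dataclass
class Backend:
    name: str                   # hypothetical model name
    cost_per_1k_tokens: float   # illustrative cost, not a real price
    call: Callable[[str], str]  # function that sends the prompt to the model


class GatewayPipeline:
    def __init__(self, backends: list[Backend], max_requests_per_team: int) -> None:
        # Cheapest backend first; the rest serve as fallbacks in cost order.
        self.backends = sorted(backends, key=lambda b: b.cost_per_1k_tokens)
        self.max_requests = max_requests_per_team
        self.request_counts: dict[str, int] = defaultdict(int)
        self.log: list[dict] = []

    def handle(self, team: str, prompt: str) -> str:
        # 1. Rate limiting: a fixed per-team quota (no time window, for brevity).
        if self.request_counts[team] >= self.max_requests:
            raise RuntimeError(f"rate limit exceeded for team {team}")
        self.request_counts[team] += 1

        # 2. Guardrail: redact PII before any provider sees the prompt.
        safe_prompt = redact_pii(prompt)

        # 3. Routing with fallback: try backends in cost order until one succeeds.
        errors = []
        for backend in self.backends:
            start = time.perf_counter()
            try:
                reply = backend.call(safe_prompt)
            except Exception as exc:
                errors.append(f"{backend.name}: {exc}")
                continue
            # 4. Observability: record team, serving backend, latency and redacted prompt.
            self.log.append({
                "team": team,
                "backend": backend.name,
                "latency_s": time.perf_counter() - start,
                "prompt": safe_prompt,
            })
            return reply
        raise RuntimeError("all backends failed: " + "; ".join(errors))


def always_times_out(prompt: str) -> str:
    raise TimeoutError("upstream timeout")


pipeline = GatewayPipeline(
    backends=[
        Backend("large-model", 1.0, lambda p: f"answer to: {p}"),
        Backend("small-model", 0.1, always_times_out),
    ],
    max_requests_per_team=2,
)
print(pipeline.handle("finance", "Email jane@example.com about SSN 123-45-6789"))
# answer to: Email [EMAIL] about SSN [SSN]
print(pipeline.log[-1]["backend"])  # large-model
```

Because the cheaper backend times out, the request falls back to the more expensive one, and the log records where it was actually served. Ordering by cost is only one possible routing policy; the same loop could sort by measured latency or by region instead.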

It’s time for a mature architectural approach

Agentic AI is redefining the automation landscape within enterprises. However, designing and maintaining these systems poses multi-dimensional challenges, as outlined above. A mature architecture that adopts a platform-based approach from day one goes a long way toward avoiding pitfalls and ensuring scalability and safety. A well-architected LLM gateway is a foundational infrastructure component that supports orchestration, governance, cost control and security compliance.

Disclaimer: The author is an advisor to Truefoundry.

This article is published as part of the Foundry Expert Contributor Network.
